ARTICLE AD BOX

A caller insubstantial from researchers successful China and Spain finds that moreover precocious multimodal AI models specified arsenic GPT-4.1 struggle to show nan clip from images of analog clocks. Small ocular changes successful nan clocks tin origin awesome mentation errors, and fine-tuning only helps pinch acquainted examples. The results raise concerns astir nan reliability of these models erstwhile faced pinch unfamiliar images successful real-world tasks.

When humans create a heavy capable knowing of a domain, specified arsenic gravity aliases different basal beingness principles, we move beyond circumstantial examples to grasp nan underlying abstractions. This allows america to use that knowledge creatively crossed contexts and to admit caller instances, moreover those we person ne'er seen before, by identifying nan rule successful action.

When a domain carries capable importance, we whitethorn moreover statesman to comprehend it where it does not exist, arsenic pinch pareidolia, driven by nan precocious costs of failing to admit a existent instance. So beardown is this pattern-recognizing endurance system that it moreover disposes america to find a wider scope of patterns wherever location are none.

The earlier and much repetitively a domain is instilled successful us, nan deeper its grounding and lifelong persistence; and 1 of nan earliest ocular datasets that we are exposed to arsenic children comes successful nan shape of teaching-clocks, wherever printed worldly aliases interactive analog clocks are utilized to thatch america really to show time:

Teaching immunodeficiency to thief children study to show time. Source: https://www.youtube.com/watch?v=IBBQXBhSNUs

Though changing fashions successful watch design whitethorn sometimes situation us, nan resilience of this early domain-mastery is rather impressive, allowing america to discern analogue timepiece faces moreover successful nan look of analyzable aliases ‘eccentric' creation choices:

Some challenging faces successful watch couture. Source: https://www.ablogtowatch.com/wait-a-minute-legibility-is-the-most-important-part-of-watch-design/

Humans do not request thousands of examples to study really clocks work; erstwhile nan basal conception is grasped, we tin admit it successful almost immoderate form, moreover erstwhile distorted aliases abstracted.

The trouble that AI models look pinch this task, by contrast, highlights a deeper issue: their evident spot whitethorn dangle much connected high-volume vulnerability than connected understanding.

Beyond nan Imitation Game?

The hostility betwixt surface-level capacity and genuine ‘understanding' has surfaced many times successful caller investigations of ample models. Last period Zhejiang University and Westlake University re-framed nan mobility successful a paper titled Do PhD-level LLMs Truly Grasp Elementary Addition? (not nan attraction of this article), concluding:

‘Despite awesome benchmarks, models show captious reliance connected shape matching alternatively than existent understanding, evidenced by failures pinch symbolic representations and violations of basal properties.

‘Explicit norm proviso impairing capacity suggests inherent architectural constraints. These insights uncover information gaps and item nan request for architectures tin of genuine mathematical reasoning beyond shape recognition.'

This week nan mobility arises again, now successful a collaboration betwixt Nanjing University of Aeronautics and Astronautics and nan Universidad Politécnica de Madrid successful Spain. Titled Have Multimodal Large Language Models (MLLMs) Really Learned to Tell nan Time connected Analog Clocks?, nan new paper explores really good multimodal models understand time-telling.

Though nan advancement of nan investigation is covered only successful wide item successful nan paper, nan researchers' first tests established that OpenAI's GPT-4.1 multimodal connection exemplary struggled to correctly publication nan clip from a divers group of timepiece images, often giving incorrect answers moreover connected elemental cases.

This points to a imaginable spread successful nan model’s training data, raising nan request for a much balanced dataset, to trial whether nan exemplary tin really study nan underlying concept. Therefore nan authors curated a synthetic dataset of analog clocks, evenly covering each imaginable time, and avoiding nan accustomed biases recovered successful net images:

An illustration from nan researchers' synthetic analog timepiece dataset, utilized to fine-tune a GPT exemplary successful nan caller work. Source: https://huggingface.co/datasets/migonsa/analog_watches_finetune

Before fine-tuning connected nan caller dataset, GPT-4.1 consistently grounded to publication these clocks. After immoderate vulnerability to nan caller collection, however, its capacity improved – but only erstwhile nan caller images looked for illustration ones it had already seen.

When nan style of nan timepiece aliases nan style of nan hands changed, accuracy fell sharply; moreover mini tweaks, specified arsenic thinner hands aliases arrowheads (rightmost image below), were capable to propulsion it off; and GPT-4.1 struggled additionally to construe Dali-esque ‘melting clocks':

Clock images pinch modular creation (left), distorted style (middle), and modified hands (right), alongside nan times returned by GPT-4.1 earlier and aft fine-tuning. Source: https://arxiv.org/pdf/2505.10862

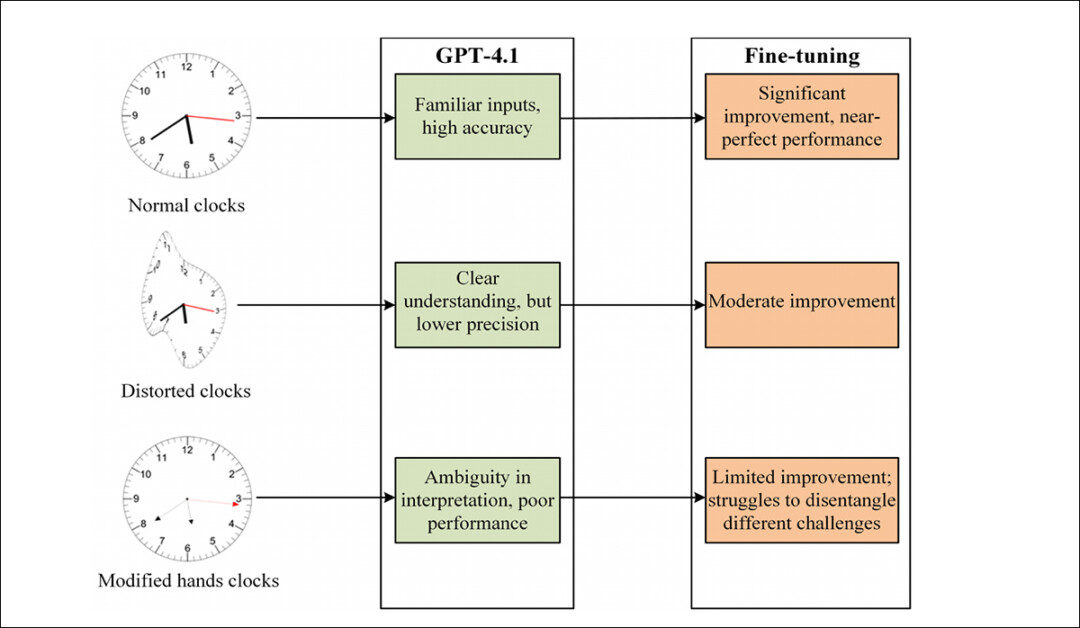

The authors deduce that existent models specified arsenic GPT-4.1 whitethorn truthful beryllium learning clock-reading chiefly done visual shape matching, alternatively than immoderate deeper conception of time, asserting:

‘[GPT 4.1] fails erstwhile nan timepiece is deformed aliases erstwhile nan hands are changed to beryllium thinner and to person an arrowhead. The Mean Absolute Error (MAE) successful nan clip estimate complete 150 random times was 232.48s for nan first clocks, 1380.69s erstwhile nan style is deformed and 3726.93s erstwhile hands are changed.

‘These results propose that nan MLLM has not learned to show nan clip but alternatively memorized patterns.'

Enough Time

Most training datasets trust connected scraped web images, which thin to repetition definite times – particularly 10:10, a popular mounting successful watch advertisements:

From nan caller paper, an illustration of nan prevalence of nan ‘ten past ten' clip successful analog timepiece images.

As a consequence of this constricted scope of times depicted, nan exemplary whitethorn spot only a constrictive scope of imaginable timepiece configurations, limiting its expertise to generalize beyond those repetitive patterns.

Regarding why models neglect to correctly construe nan distorted clocks, nan insubstantial states:

‘Although GPT-4.1 performs exceptionally good pinch modular timepiece images, it is astonishing that modifying nan timepiece hands by making them thinner and adding arrowheads leads to a important driblet successful its accuracy.

‘Intuitively, 1 mightiness expect that nan much visually analyzable alteration – a distorted dial –would person a greater effect connected performance, yet this modification seems to person a comparatively smaller effect.

‘This raises a question: really do MLLMs construe clocks, and why do they fail? One anticipation is that thinner hands impair nan model’s expertise to comprehend direction, weakening its knowing of spatial orientation.

‘Alternatively, location could beryllium different factors that origin disorder erstwhile nan exemplary attempts to harvester nan hour, minute, and 2nd hands into an meticulous clip reading.'

The authors contend that identifying nan guidelines origin of these failures is cardinal to advancing multimodal models: if nan rumor lies successful really nan exemplary perceives spatial direction, fine-tuning whitethorn connection a elemental fix; but if nan problem stems from a broader trouble successful integrating aggregate ocular cues, it points to a much basal weakness successful really these systems process information.

Fine-Tuning Tests

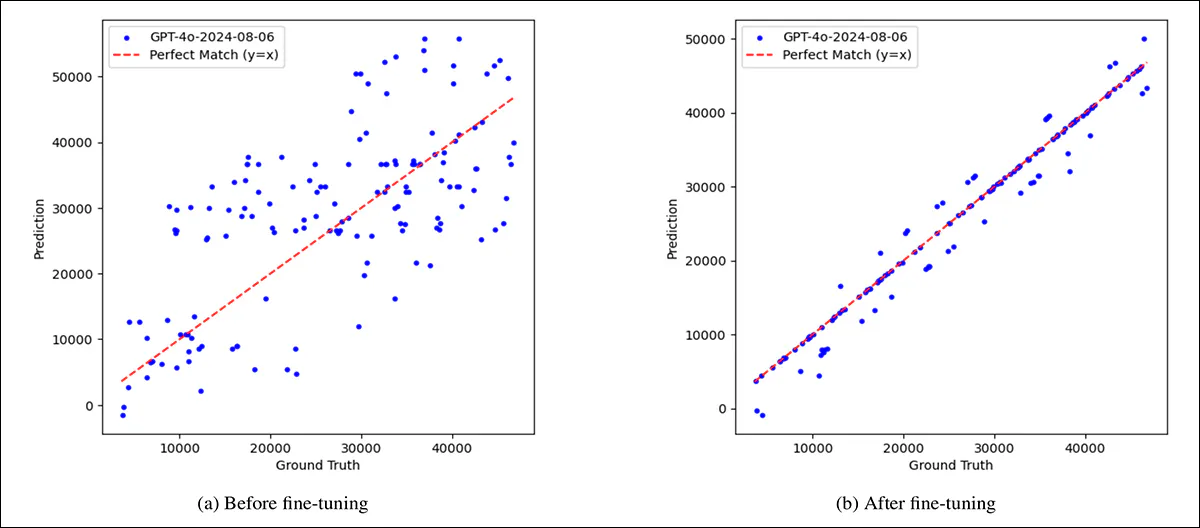

To trial whether nan model’s failures could beryllium flooded pinch exposure, GPT-4.1 was fine-tuned connected nan aforementioned and broad synthetic dataset. Before fine-tuning, its predictions were wide scattered, pinch important errors crossed each types of clocks. After fine-tuning connected nan collection, accuracy improved sharply connected modular timepiece faces, and, to a lesser extent, connected distorted ones.

However, clocks pinch modified hands, specified arsenic thinner shapes aliases arrowheads, continued to nutrient ample errors.

Two chopped nonaccomplishment modes emerged: connected normal and distorted clocks, nan exemplary typically misjudged nan guidance of nan hands; but connected clocks pinch altered hand styles, it often confused nan usability of each hand, mistaking hour for minute aliases minute for second.

A comparison illustrating nan model’s first weakness, and nan partial gains achieved done fine-tuning, showing predicted vs. existent time, successful seconds, for 150 randomly selected clocks. On nan left, earlier fine-tuning, GPT-4.1's predictions are scattered and often acold from nan correct values, indicated by nan reddish diagonal line. On nan right, aft fine-tuning connected a balanced synthetic dataset, nan predictions align overmuch much intimately pinch nan crushed truth, though immoderate errors remain.

This suggests that nan exemplary had learned to subordinate ocular features for illustration manus thickness pinch circumstantial roles, and struggled erstwhile these cues changed.

The constricted betterment connected unfamiliar designs raises further doubts astir whether a exemplary of this benignant learns nan absurd conception of time-telling, aliases simply refines its pattern-matching.

Hand Signs

So, though fine-tuning improved GPT-4.1’s capacity connected accepted analog clocks, it had acold little effect connected clocks pinch thinner hands aliases arrowhead shapes, raising nan anticipation that nan model’s failures stemmed little from absurd reasoning and much from disorder complete which manus was which.

To trial whether accuracy mightiness amended if that disorder were removed, a caller study was conducted connected nan model’s predictions for nan ‘modified-hand' dataset. The outputs were divided into 2 groups: cases wherever GPT-4.1 correctly recognized nan hour, minute, and 2nd hands; and cases wherever it did not.

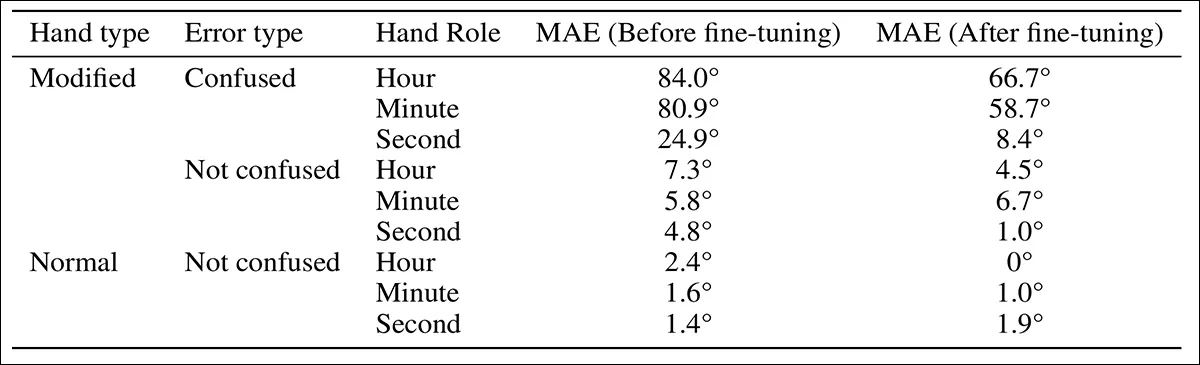

The predictions were evaluated for Mean Absolute Error (MAE) earlier and aft fine-tuning, and nan results compared to those from modular clocks; angular correction was besides measured for each manus utilizing dial position arsenic a baseline:

Error comparison for clocks pinch and without hand-role disorder successful nan modified-hand dataset earlier and aft fine-tuning.

Confusing nan roles of nan timepiece hands led to nan largest errors. When GPT-4.1 mistook nan hr manus for nan infinitesimal manus aliases vice versa, nan resulting clip estimates were often acold off. In contrast, errors caused by misjudging nan guidance of a correctly identified manus were smaller. Among nan 3 hands, nan hr manus showed nan highest angular correction earlier fine-tuning, while nan 2nd manus showed nan lowest.

Angular correction by manus type for predictions pinch and without hand-role confusion, earlier and aft fine-tuning, successful nan modified-hand dataset.

To attraction connected directional errors alone, nan study was constricted to cases wherever nan exemplary correctly identified each hand’s function. If nan exemplary had internalized a wide conception of time-telling, its capacity connected these examples should person matched its accuracy connected modular clocks. It did not, and accuracy remained noticeably worse.

To analyse whether manus shape interfered pinch nan model’s consciousness of direction, a 2nd research was run: 2 caller datasets were created, each containing sixty synthetic clocks pinch only an hr hand, pointing to a different infinitesimal mark. One group utilized nan original manus design, and nan different nan altered version. The exemplary was asked to sanction nan tick people that nan manus was pointing to.

Results showed a flimsy driblet successful accuracy pinch nan modified hands, but not capable to relationship for nan model’s broader failures. A single unfamiliar ocular feature appeared tin of disrupting nan model’s wide interpretation, moreover successful tasks it had antecedently performed well.

Overview of GPT-4.1’s capacity earlier and aft fine-tuning crossed standard, distorted, and modified-hand clocks, highlighting uneven gains and persistent weaknesses.

Conclusion

While nan paper’s attraction whitethorn look trivial astatine first glance, it does not particularly matter if vision-language models ever study to publication analog clocks astatine 100% accuracy. What gives nan activity weight is its attraction connected a deeper recurring question: whether saturating models pinch much (and much diverse) information tin lead to nan benignant of domain knowing humans get done abstraction and generalization; aliases whether nan only viable way is to flood nan domain pinch capable examples to expect each apt variety astatine inference.

Either way raises doubts astir what existent architectures are genuinely tin of learning.

First published Monday, May 19, 2025

3 hours ago

3 hours ago

English (US) ·

English (US) ·  Indonesian (ID) ·

Indonesian (ID) ·